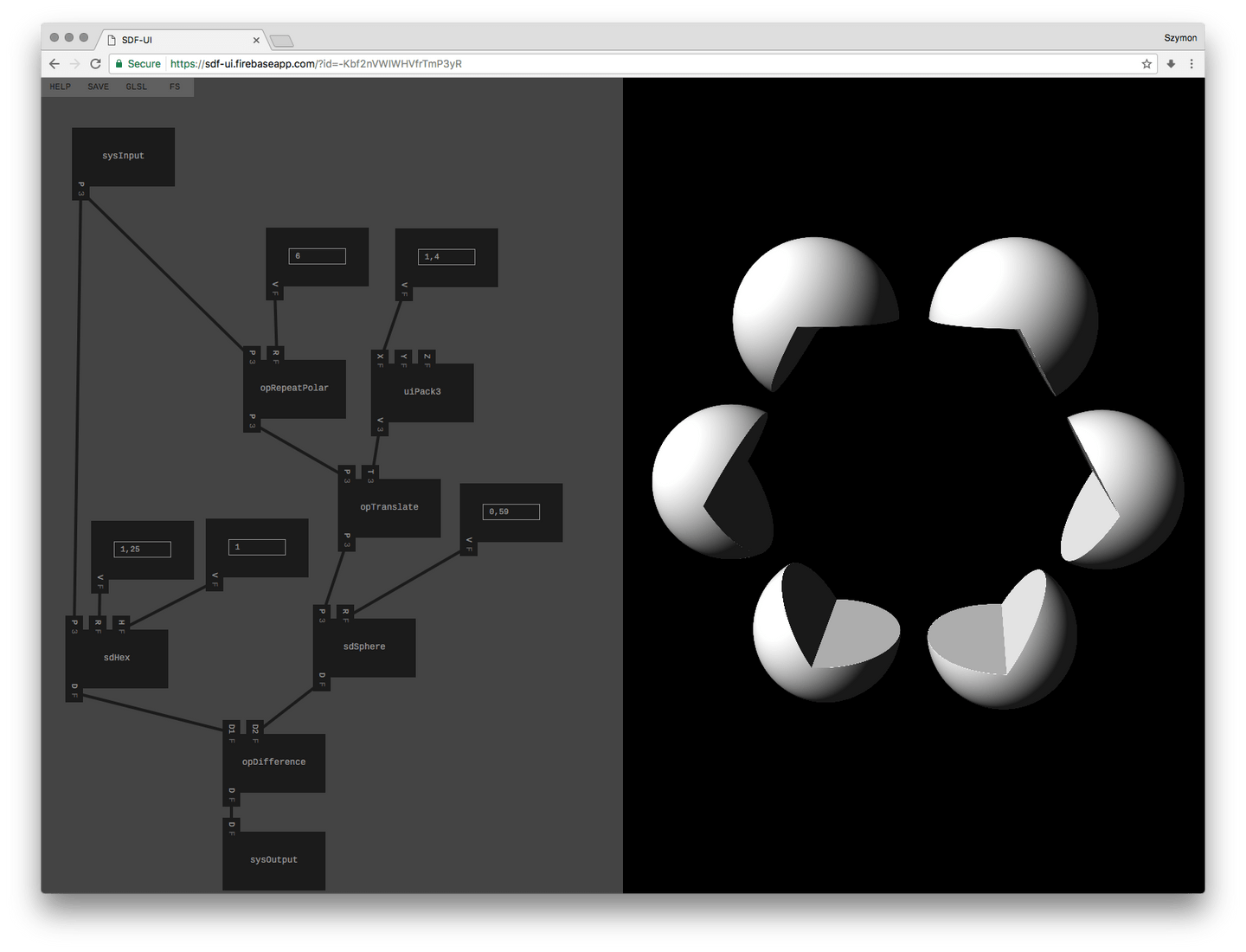

SDF-UI is a node-based DSL for generating complex shapes using SDF, GLSL and WebGL, which I built in January 2017. You can read more about it here: projects/sdf-ui.

I have a fairly common problem: I don't finish personal projects. I tend to start a lot of them, and once the main problem is semi-solved (or I get bored), I move on to something else, leaving no trace of having done anything. I usually learn something in the process, but it would be much nicer, and hopefully more useful, to have something to share.

In 2017 I decided to work on a single personal project every month, with a strong incentive to publish it at the end of that month, and SDF-UI is the first project of that kind.

You can read more about SDFs and why they are cool on the project page and all over the internet, so I'm going to skip that part.

I was experimenting with this technique for generating images in November last year, and one of the biggest problems for me was that, after a bit of fun, the modeling function would turn into a mess like this:

```glsl
return vec2(
  max(
    -sdBox(p, vec3(r + .01, r * 2. - c, r - c)),
    max(
      -sdBox(p, vec3(r - c, r * 2. + .01, r - c)),
      max(
        -sdBox(p, vec3(r - c, r * 2. - c, r + .01)),
        max(
          -sdSin(p - vec3(0., r - 1.0, 0.)),
          sdBox(p, vec3(r, r * 2., r))
        )
      )
    )
  ),
  0.0);
```

Obviously, this could have been written much more nicely, but then all the parts of the scene would have to be named (box1, box2, etc.), and this additional thing to keep in mind doesn't fit well with a playful mindset, at least for me.

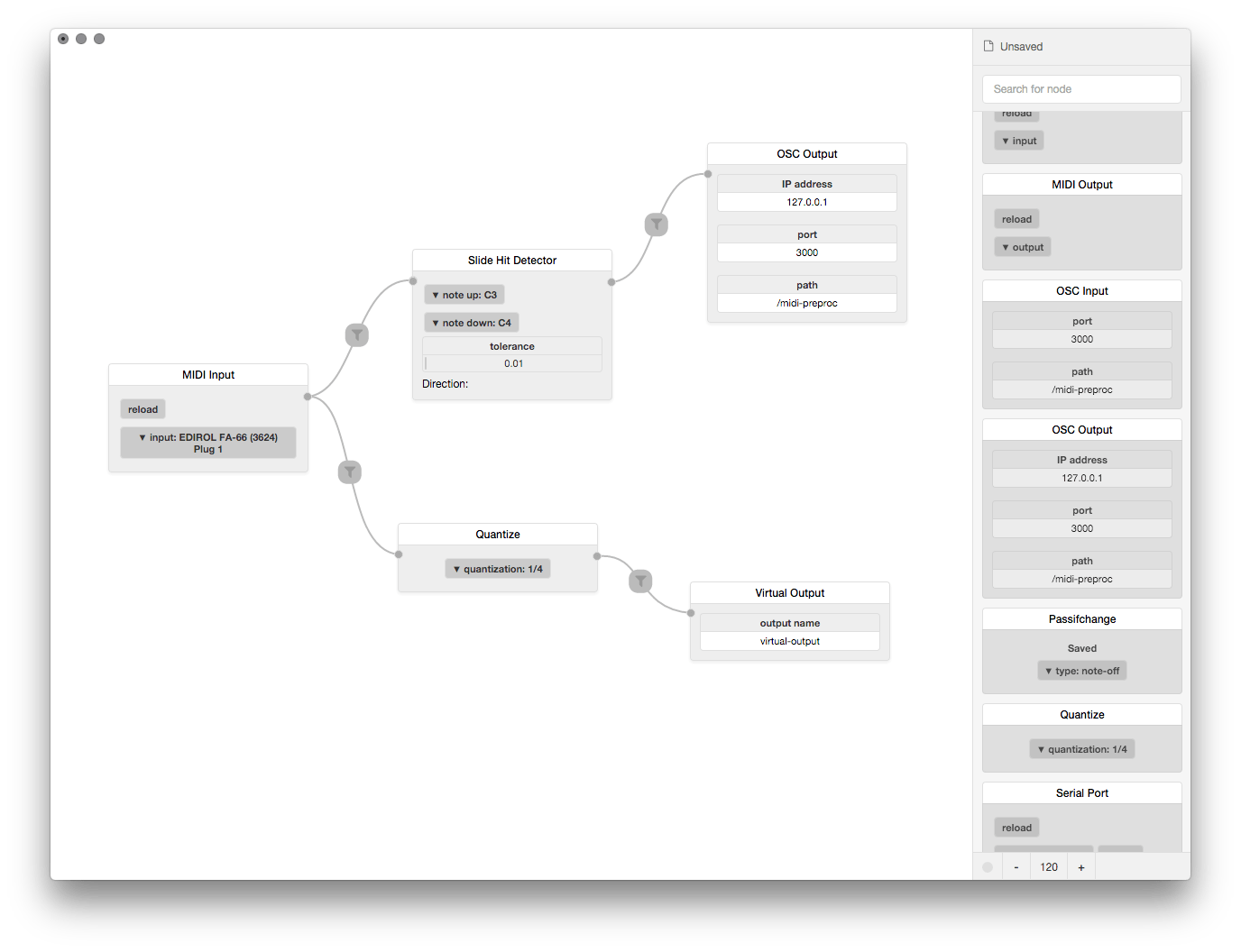

The second reason why I wanted to work on this is that I've already built a few node-based UIs: one for building interactive movies, and then two much more interesting ones. The first is a MIDI preprocessor for HLO, which allows users to modify MIDI messages on the fly and route them between hardware (MIDI keyboards, Arduinos, etc.) and software (OSC, virtual MIDI).

The second is SAM 2.0 for SAM Labs, a visual programming language for connecting different Bluetooth modules together, aimed at kids. Unfortunately, neither of these projects is finished yet, and it doesn't seem they will see the light of day any time soon.

When working on projects like that, I like to start with the thing that I know I have no idea how to do.

In this case that was turning the graph structure into linear shader code.

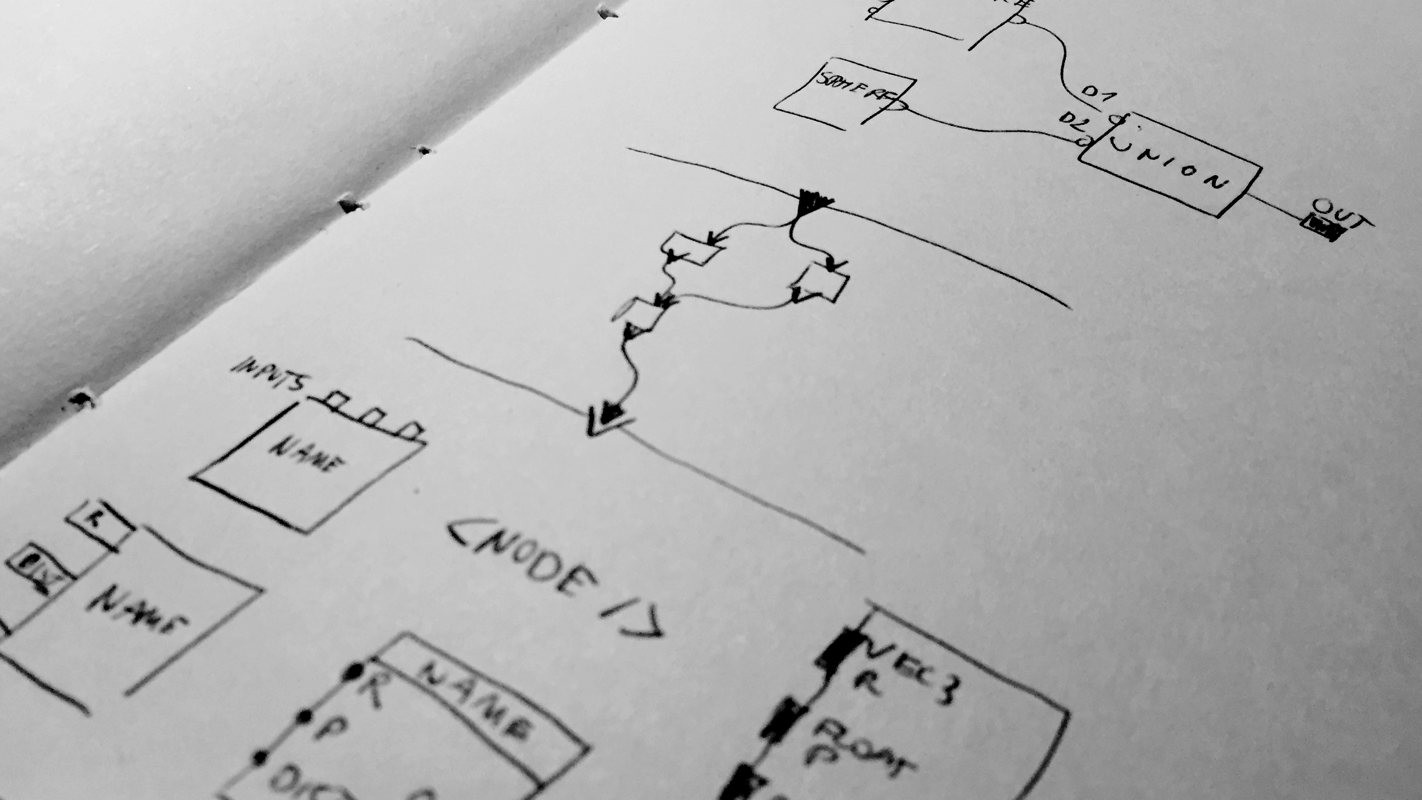

I started by sketching ideas for the graph structure:

- nodes represent GLSL functions (sdSphere(), opRepeat(), etc.)
- nodes can have multiple inputs (function arguments), but only a single output (float, vec2, vec3, etc.)
- a node output can be connected to multiple inputs, but a node input can only have one incoming edge (if you imagine an input as a function argument, there's no easy way of putting two values there at the same time)

The basic JSON representing this structure looked like this:

```json
{
  "nodes": {
    "n001": { "id": "n001", "type": "sdSphere" },
    "n002": { "id": "n002", "type": "sysInput" },
    "n003": { "id": "n003", "type": "sysOutput" }
  },
  "edges": {
    "e001": {
      "id": "e001",
      "from": { "id": "n001" },
      "to": { "id": "n003", "inlet": "d" }
    },
    "e002": {
      "id": "e002",
      "from": { "id": "n002" },
      "to": { "id": "n001", "inlet": "p" }
    }
  }
}
```

This represents a simple connection: sysInput -> sdSphere -> sysOutput.
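The "one incoming edge per input" rule from the list above can be enforced at the moment an edge is added. Here is a minimal sketch, assuming the JSON shape shown above; `connect` is a hypothetical helper, and replacing the old edge on conflict is just one possible policy, not necessarily what SDF-UI does:

```javascript
// Hypothetical helper: returns a new graph with `edge` added.
// Any existing edge into the same node inlet is dropped first,
// so each inlet keeps at most one incoming edge.
const connect = (graph, edge) => {
  const edges = {};

  for (const [id, existing] of Object.entries(graph.edges)) {
    const sameInlet =
      existing.to.id === edge.to.id && existing.to.inlet === edge.to.inlet;

    if (!sameInlet) edges[id] = existing;
  }

  edges[edge.id] = edge;

  return { ...graph, edges };
};

const baseGraph = {
  nodes: {
    n001: { id: "n001", type: "sdSphere" },
    n002: { id: "n002", type: "sysInput" },
    n003: { id: "n003", type: "sysOutput" },
  },
  edges: {
    e001: { id: "e001", from: { id: "n001" }, to: { id: "n003", inlet: "d" } },
  },
};

// an output may feed many inlets, so this simply adds a second edge
const withInput = connect(baseGraph, {
  id: "e002",
  from: { id: "n002" },
  to: { id: "n001", inlet: "p" },
});

// a second edge into n003's "d" inlet replaces e001 instead of joining it
const rewired = connect(withInput, {
  id: "e003",
  from: { id: "n002" },
  to: { id: "n003", inlet: "d" },
});

console.log(Object.keys(rewired.edges)); // [ 'e002', 'e003' ]
```

Returning a new graph instead of mutating the old one is a choice made for this sketch; it happens to play well with keeping the graph in a store.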

I kept this JSON in a file, and started working on ideas for how to unwind the graph.

I ended up with a solution where I start from the sysOutput node (I know this is the output of the system), and build up by finding what is connected to its inputs, then what is connected to the inputs of those nodes, and so on.

This recursive function lives in the compile-graph file.
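To make the idea concrete, here is a minimal sketch of such a backwards walk. It is not the code from compile-graph: `walk` and `compileTree` are hypothetical names, the graph is assumed to be acyclic, and the result is only a nested tree of node types rather than shader code:

```javascript
// Hypothetical sketch: resolve a node into a nested tree by following
// edges backwards, from a node's inlets to whatever feeds them.
// Assumes the graph has no cycles, otherwise this would never terminate.
const walk = (graph, nodeId) => {
  const node = graph.nodes[nodeId];
  const inputs = {};

  for (const edge of Object.values(graph.edges)) {
    if (edge.to.id === nodeId) {
      // recurse into the node connected to this inlet
      inputs[edge.to.inlet] = walk(graph, edge.from.id);
    }
  }

  return { type: node.type, inputs };
};

// the starting point is the single sysOutput node
const compileTree = (graph) => {
  const output = Object.values(graph.nodes).find(
    (node) => node.type === "sysOutput"
  );

  return walk(graph, output.id);
};

const sphereGraph = {
  nodes: {
    n001: { id: "n001", type: "sdSphere" },
    n002: { id: "n002", type: "sysInput" },
    n003: { id: "n003", type: "sysOutput" },
  },
  edges: {
    e001: { id: "e001", from: { id: "n001" }, to: { id: "n003", inlet: "d" } },
    e002: { id: "e002", from: { id: "n002" }, to: { id: "n001", inlet: "p" } },
  },
};

const tree = compileTree(sphereGraph);

console.log(JSON.stringify(tree));
// {"type":"sysOutput","inputs":{"d":{"type":"sdSphere","inputs":{"p":{"type":"sysInput","inputs":{}}}}}}
```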

Walking the graph backwards is unfortunately not enough to build the shader code. We also need to store and access the GLSL code that a given node type represents: sdSphere has to be turned into sdSphere(p, pos, r), etc.

The second part of the system is a function which generates a class for a node from a simple spec file. All nodes are created like this:

```javascript
export default generateNode({
  spec: {
    inlets: [
      { id: "p", type: "vec3", value: "vec3(0.0)" },
      { id: "r", type: "float", value: "0.5" },
    ],
    outlet: { id: "d", type: "float" },
  },
  frag: `
    float sdSphere(vec3 p, float s) {
      return length(p) - s;
    }`,
  generate: ({ p, r }) => {
    return `sdSphere(${p}, ${r})`;
  },
});
```

- spec describes the inlets and the outlet of the node
- frag is the part of the fragment shader that will be injected into the final GLSL code
- generate is a function which receives all inlet values and returns a string that will be injected into the modeling part of the GLSL code

You can have a look at the internals of generateNode.

I think the most interesting part is a little trick: overriding toString of the returned class.

This allows me to simply plug instances of different classes into each other, exactly as if I were concatenating strings.

For example, in sdSphere, if r is connected to mathSin, which is connected to sysTime, it will first run toString() on mathSin, which in its generate function will run toString() on sysTime, which returns the string time (a uniform, but that's not important), thus turning this whole chain into sdSphere(p, sin(time)).

On the other hand, if r isn't connected to anything, it will grab the default value for this input, the string 0.5, turning the final code into sdSphere(p, 0.5).

Neat, right?
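A stripped-down sketch of the trick, for illustration only: `makeNode` is a hypothetical stand-in for generateNode (the real one also deals with spec types and frag), the node names are capitalised versions of the ones above, and the default for p is simply the string p to keep the example short:

```javascript
// Hypothetical, simplified take on the idea (not the real generateNode):
// every node instance stringifies to the GLSL expression it stands for.
const makeNode = ({ inlets, generate }) =>
  class Node {
    constructor(connections = {}) {
      this.connections = connections;
    }

    toString() {
      const values = {};

      for (const { id, value } of inlets) {
        // a connected node wins, otherwise fall back to the inlet default
        values[id] = id in this.connections ? this.connections[id] : value;
      }

      return generate(values);
    }
  };

const SysTime = makeNode({ inlets: [], generate: () => "time" });

const MathSin = makeNode({
  inlets: [{ id: "x", value: "0.0" }],
  generate: ({ x }) => `sin(${x})`,
});

const SdSphere = makeNode({
  inlets: [
    { id: "p", value: "p" }, // assumption: simplified default
    { id: "r", value: "0.5" },
  ],
  generate: ({ p, r }) => `sdSphere(${p}, ${r})`,
});

// template literals call toString() on interpolated objects,
// so nesting instances behaves like concatenating strings
const connected = new SdSphere({ r: new MathSin({ x: new SysTime() }) });

console.log(String(connected)); // sdSphere(p, sin(time))
console.log(String(new SdSphere())); // sdSphere(p, 0.5)
```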

One thing I wish I could have spent more time on is designing the actual DSL. I tried to keep an eye on things happening organically: I ended up only using the float and vec3 types, and tried to keep naming and conventions consistent. Nodes generating surfaces always have the point p as their first input, distance is always named d, etc.

Once GLSL generation was in place, I started working on the UI.

One month seems like a long time, but I only get 1-2h a day tops for working on my own things, so I had to be pragmatic if I wanted to finish this in time.

I chose a stack that I know well: react, react-gl, redux and immutable.

I augmented the initial JSON with x and y positions for each node, and started drawing things on screen: first the nodes in their proper positions, then the connections between them as SVG lines. Dragging is performed by dynamically adding a mousemove listener on mousedown, and removing it on mouseup, which is the approach I've found to be the most reliable. You can look at onMouseDown in the editor-node file.
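The dragging pattern can be sketched roughly like this. `makeDraggable` is a hypothetical helper rather than the onMouseDown from editor-node, and listening on a separate `target` (window in a browser) is an assumption of this sketch:

```javascript
// Hypothetical helper: `element` is the node's DOM element and `target` is
// where move/up events are listened for (window in a browser).
const makeDraggable = (element, target, onDrag) => {
  const onMouseDown = (down) => {
    const onMouseMove = (move) => {
      // report the offset from the point where the drag started
      onDrag({
        dx: move.clientX - down.clientX,
        dy: move.clientY - down.clientY,
      });
    };

    const onMouseUp = () => {
      // the listeners only exist for the duration of a single drag
      target.removeEventListener("mousemove", onMouseMove);
      target.removeEventListener("mouseup", onMouseUp);
    };

    target.addEventListener("mousemove", onMouseMove);
    target.addEventListener("mouseup", onMouseUp);
  };

  element.addEventListener("mousedown", onMouseDown);
};
```

In a browser this could be wired up as `makeDraggable(nodeElement, window, ({ dx, dy }) => { ... })`. Listening on the window rather than on the node itself means the drag keeps working when the cursor moves faster than the node can follow.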

One of the tricks I came up with, inspired mostly by Om, is to keep almost everything in the redux store.

This gives us the nice property of being able to dump it into localStorage on every change, and load it from there every time the app starts, which gives us something resembling a cheap code hot-replace.

It's still easier to set up and manage than HMR in my experience.

```javascript
// check if we are in "debug" mode (http://localhost:3000/?debug)
const isDebug = window.location.search.indexOf("debug") >= 0;

let parsed;

if (isDebug) {
  try {
    parsed = JSON.parse(localStorage.getItem("state"));
  } catch (e) {
    console.error(e);
  }

  // helpful global function for clearing the state
  window.clearState = () => {
    localStorage.setItem("state", null);
    window.location.reload();
  };
}

const initialState = parsed || defaultState;
```

This allowed me to play with the design and debug without having to re-create the state from scratch every time.
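The snippet above covers loading; the saving half can be sketched as a store subscription. This is an assumption about how it could look, not the project's code: `persistState` is a hypothetical name, and the fake store and storage exist only so the example runs outside of a browser (with redux and localStorage you would pass those in instead):

```javascript
// Hypothetical sketch: `store` only needs redux-style getState/subscribe,
// `storage` only needs a localStorage-style setItem.
const persistState = (store, storage, key = "state") =>
  store.subscribe(() => {
    storage.setItem(key, JSON.stringify(store.getState()));
  });

// tiny stand-in for a store; unlike redux, dispatch takes the next state
// directly instead of an action, to keep the example short
const createFakeStore = (state) => {
  const listeners = [];

  return {
    getState: () => state,
    subscribe: (fn) => {
      listeners.push(fn);
    },
    dispatch: (nextState) => {
      state = nextState;
      listeners.forEach((fn) => fn());
    },
  };
};

// stand-in for localStorage
const saved = new Map();
const fakeStorage = { setItem: (key, value) => saved.set(key, value) };

const fakeStore = createFakeStore({ nodes: {} });

persistState(fakeStore, fakeStorage);
fakeStore.dispatch({ nodes: { n001: { x: 10, y: 20 } } });

console.log(saved.get("state")); // {"nodes":{"n001":{"x":10,"y":20}}}
```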

The second nice redux trick is keeping all action handlers in a hash-map, which makes it easy to find typos and unhandled actions:

```javascript
// map of action type -> handler function
const actions = {
  ADD_NODE: addNode,
  MOVE_NODE: moveNode,
  DELETE_NODE: deleteNode,
};

export default (state = initialState, action) => {
  if (actions[action.type]) {
    state = actions[action.type](state, action);
  } else {
    console.warn(`No handler for action ${action.type}`);
  }

  return state;
};
```

I finished this project in exactly 30 hours of coding, which I think is pretty good for what it can currently do. It's a nice feeling when things start clicking together, and at the end I was able to add a bunch of different nodes quickly, because the architecture had been prepared for that from the beginning.

There's obviously a lot to improve, but this was never intended to be a finished product. It's meant more as inspiration and sample code for people wanting to experiment with building their own node-based DSLs.

You can play with the live version here: https://sdf-ui.firebaseapp.com/. It's a good idea to open HELP if you are visiting for the first time.

The code is open source and available under the MIT license here: szymonkaliski/SDF-UI.

You can read more about the process behind making this project on the blog: building SDF-UI.